Random stuff I feel like writing about including stories from my game development career, new tech ideas and whatever else comes to my mind.

Thursday, November 22, 2012

Planetary Annihilation Engine Architecture Update #1

You can preorder PA at the Uber Store

So I'm going to ramble on a little bit here about the engine architecture for the UI of Planetary Annihilation. This is by no means a complete description, just whatever I feel like rambling about while watching the turkey get prepped.

Game UI systems are, generally speaking, a disaster. You have quite a few choices but none of them are really great. Building UI is difficult and time consuming. Combine that with wacky tools and you can end up in a pretty bad place. Furthermore, one of our primary design goals is the ability for end users to mod and improve the UI, preferably in a way that allows mods to work together with each other.

What that really means is that we can't just bake the UI into some C++ code. Even a DLL system would have issues because we are cross platform. We need a flexible and extensible system for building UI that's available everywhere. Having something that is commonly used and that has a set of existing tools is also a bonus. In addition since so much of our UberNet infrastructure is web based it's required that we have a way to embed a web browser into the game client.

So given all of that, what's a logical system for us to use? We could certainly build a custom system and embed Lua for example. Or we could license a 3rd party library like Scaleform and use ActionScript.

Or we could do the obvious thing and simply use a web browser as the UI system. So that's what we've chosen to do. Basically the client is a transparent web browser (WebKit) window over the top of an OpenGL context. All of the front end UI and the top level game UI is being written in JavaScript. JavaScript, while not my favorite language by any stretch, has the advantage of being the most popular language on earth. It has suites of tools dedicated to it. It's got a fairly decent JIT.

So the basic idea here is that the engine exposes a set of JavaScript interfaces. The web browser draws all of the UI elements and also controls issuing orders, selection etc. By exposing more interfaces we can give the JavaScript more control, and I intend to expose more than we actually use. The planetary editor is also written the same way, which means it's extensible by the community as well. In fact the planetary editor is the first real UI we are doing with the system.
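To make the shape of that idea a bit more concrete, here is a rough, language-neutral sketch written in Python rather than JavaScript. It is not the actual engine API: the `EngineBridge` class and the names `select_units`, `issue_order` and `unit_built` are all made up for illustration. The point is only that the engine decides which calls exist, and that several scripts (the base UI and a mod, say) can hook the same event side by side.

```python
from typing import Any, Callable, Dict, List, Tuple


class EngineBridge:
    """Hypothetical bridge between the engine and the UI scripting layer."""

    def __init__(self) -> None:
        self._calls: Dict[str, Callable[..., Any]] = {}
        self._listeners: Dict[str, List[Callable[[Any], None]]] = {}

    def expose(self, name: str, fn: Callable[..., Any]) -> None:
        # Engine side: publish one capability to the UI layer.
        self._calls[name] = fn

    def call(self, name: str, *args: Any) -> Any:
        # UI side: only exposed names are reachable.
        if name not in self._calls:
            raise KeyError(f"engine does not expose {name!r}")
        return self._calls[name](*args)

    def on(self, event: str, handler: Callable[[Any], None]) -> None:
        # UI side: any number of scripts can listen to the same event.
        self._listeners.setdefault(event, []).append(handler)

    def emit(self, event: str, payload: Any) -> None:
        # Engine side: notify handlers in the order they registered.
        for handler in self._listeners.get(event, []):
            handler(payload)


# Engine side (made-up state and calls, purely for the example).
selected: List[int] = []
orders: List[Tuple[str, Tuple[int, int]]] = []


def select_units(ids: List[int]) -> int:
    selected.extend(ids)
    return len(selected)


def issue_order(kind: str, target: Tuple[int, int]) -> None:
    orders.append((kind, target))


bridge = EngineBridge()
bridge.expose("select_units", select_units)
bridge.expose("issue_order", issue_order)

# UI side: the stock UI and a mod both react to the same event.
log: List[str] = []
bridge.on("unit_built", lambda unit_id: log.append(f"base ui: {unit_id}"))
bridge.on("unit_built", lambda unit_id: log.append(f"mod: {unit_id}"))

count = bridge.call("select_units", [1, 2, 3])
bridge.call("issue_order", "move", (10, 20))
bridge.emit("unit_built", 42)
```

Exposing more than the stock UI uses just means more `expose` calls on the engine side; nothing on the script side has to change.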

Early shot of some planet editor UI. Please don't consider this a style guide for the UI as this is all preliminary in terms of look and feel. Normally we would never show stuff this early but the rules have changed due to Kickstarter. The functionality in this interface will probably change dramatically over time etc etc disclaimer etc.

Overall I'm happy with this decision so far and we've already got this running on Windows/MacOS/Linux. Over the medium term I do still have some concerns about performance and how sick of a language JS is. In reality though I think this is going to be the way that many games do UI going forward as the web becomes ubiquitous.

I'm going to start a thread on our Forum to discuss this.

Sunday, November 18, 2012

Augmented Reality Movie

Just a quick note that if you are interested in AR you need to check out the short film Sight.

It's not perfect by any stretch but it's a decent visualisation of what life could be like with AR. The social impact of this technology is potentially very large.

Saturday, September 29, 2012

Engine Research

I've been in full-on engine research mode since the Kickstarter ended. Up to this point it's been mostly high-level engine design type stuff. William (main architect of the engine) and I have spent a decent amount of time hashing out different architecture stuff. For example how are we going to handle implementing the UI and support UI modding? What do the servers look like? How do we handle replays and savegames? How are units and orders represented? Coordinate spaces? Unit ids? Etc.

I've mainly been concentrating on the graphics side. Putting together all of the pieces and finalizing the design goals. I have a fairly plausible complete plan at the moment for everything with appropriate fallbacks. As I get happy with the implementation I'll talk more about the details of this stuff.

Thursday, September 13, 2012

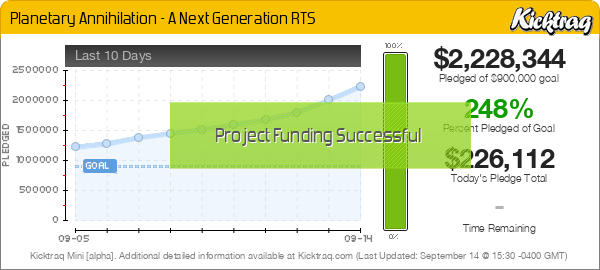

Two Million!

Wow, I'm shocked and amazed but we just crossed the $2M line on our Kickstarter.

A huge thanks to everyone out there that contributed and spread the word. Astounding!

Friday, August 31, 2012

PAX 2012

This is the first time in a couple of years that we haven't had a PAX booth. I'm going to spend some time walking around the floor and go to some meetings. Not having to work it is kind of a nice change. Although harder to get in without a booth!

Thursday, August 30, 2012

1 million dollars?

Looks like we are rapidly headed towards 7-figure land. The more we get the better a game we get to make. Looks like it's happening!

On a side note I may start blogging about the things I'm coding on a bit more as we proceed with this project.

Monday, August 27, 2012

800k!!

Well we've managed to hit 800k in less than two weeks. I've been crazy busy answering questions, doing interviews and starting to work more heavily on the technical design of the game.

The support so far has been amazing. The community is self organizing. Fan art has already started to pour in. Basically it seems like people want the game. Fantastic!

Wednesday, August 22, 2012

I've been a bit silent

I've been a bit silent mainly because I've been working on Planetary Annihilation. Now that the Kickstarter is up I'm double busy trying to keep up with the community. So far the game seems to be well received and I think we are on track to make something awesome.

Wednesday, July 11, 2012

David Brin's "Existence"

I actually took a bit of a vacation last week (fairly unusual these days) and had a chance to do some reading. The timing was good as the book was just released.

All I can say is WOW. It took me a while to get through this sucker because it's just densely packed with ideas from the future. The book takes place in about 2050 or so when a lot of our technology that's ramping up now (like Augmented Reality) is simply a part of normal existence. It's simply page after page of Brin's astounding thoughts on what society could look like in the near future given current trends. And of course it has a larger theme but read the book before spoiling it.

I would highly recommend Brin to anyone who thinks about the future or who works in this kind of technology.

I also read "Redshirts" by John Scalzi which is a fun easy read. It's about these guys on a starship who get all meta and realize that they are the red shirts and are going to die. Quite funny, especially if you get Trek.

Friday, June 15, 2012

Stargate Continuum

I'm definitely a fan of Stargate but my viewing trailed off when a lot of the characters that I like left. I've recently been catching up a bit and watched the Ark of Truth a while ago. I was kind of disappointed to be honest.

So I wasn't totally stoked to check out Continuum. Holy shit Batman was I ever wrong. Continuum was like an old school Stargate episode. Hammond of Texas? Check. O'Neill? Check. System Lords? Check. Time Travel? Check. Basically I enjoyed every single minute of it. It makes me sad I've already seen all of the old ones. I don't usually rant about this stuff but man I just loved it.

So kudos to the Stargate people for making a great show. Yes it's Star Trek in a bunker but damn it was good.

Wednesday, May 23, 2012

World Building Through Game Context

I was excited to see this piece by Patricia Hernandez over at Kotaku because she's really understood what we were trying to do with the world building in Super Monday Night Combat. Her article outlines it well but the basic idea is to describe the world without sticking it right into your face as an intro video. You learn about the dystopia by inferring what's going on outside the game through the context of (mostly) the announcers. Some novels take a similar approach to revealing a world through actions and dialog without long descriptions of world background. I'm glad people are appreciating this aspect of the game because I love the world of SMNC. We do owe a bit of a debt to Idiocracy, one of the greatest movies ever made IMHO.

For the record the fantastic Ed Kuehnel does almost all of the writing in MNC and SuperMNC. If you need some writing done he's your guy.

Sunday, May 13, 2012

RTS UI Philosophy

When I get to talking about RTS games with either fans or other people in the industry they often ask me what my favorite RTS is. Now the first one that I put any real time into was the original C&C which was just a genius of a game. But C&C suffered from the same issue as Warcraft and its successors. That issue is simply that the interface is designed to be part of the game experience. Micro play and control of individual units is commonplace in these games and the emphasis is more on tactics than huge armies. Limitations on numbers of units selected create a command and control scaling problem. I've heard designers of these games talk about these issues and most of them consider the UI to be part of the game itself. They want to limit what the player's strategic options are by making the UI more difficult to use in some cases.

To me this is the fundamental difference between games like TA and SupCom and most other RTS games. The philosophy is to make the interface as powerful as possible so that the player can concentrate on strategy, not on having to quickly hit buttons. Micro is discouraged by giving the player the ability to automate control as much as possible. Huge battles are only possible if you can actually control huge numbers of units. Things like factory queue automation, automatic orders when units are built, automatically ferrying carriers, sharing queues between factories and all kinds of other things made it into SupCom. Shift-click in TA was the original simple version of all this stuff and by itself was pretty powerful.

Now that's not the only difference but I think this is one of the major things that differentiates these games from one another. I want huge fucking battles! Let me build 10,000 units and throw them against the enemy's buzzsaw!

Anyway I've been thinking a lot about RTS stuff lately now that Super Monday Night Combat is out. I have some very specific ideas about what the next generation of RTS looks like that I would like to put into motion. More on that later...

Thursday, May 10, 2012

Analog was cool

I was thinking a little bit about how old photographs have really decent image quality. In fact other than the color issue they can pretty much have as much detail as typical modern photos. Why? Because analog technology works really well for capturing sound and images. It's really a great hack that "just works" for a lot of things. Think about photos. It's taken us until basically the last decade to get digital cameras that are good enough. Do you have any idea how much processing is going on inside a typical digital camera? To match analog pictures you basically need megapixels worth of data. We had to invent good compression algorithms, CCD sensors with high resolution and decent microprocessors to match a few mixed up chemicals soaked into some paper (or on glass).

Another thing to think about is sound. Edison basically started with a piece of tinfoil and a needle. The technology of analog sound is fairly trivial. Extending it to electric analog is also fairly trivial. Saving that sound to something like tape is a bit more work but in the scheme of things is also trivial. These are easy to bootstrap technologies that from a cost effectiveness standpoint blow away digital. Analog tech has huge bang for the buck.

Of course once you get your digital stuff bootstrapped to where we have it now we start to see enormous advantages over analog. Perfect copies sent instantly anywhere in the world is a good start. We can store enormous amounts of information in a small space now. Imagine lugging around your record collection! I knew people that had walls of tapes so they could have a large music collection. Those are the people that now have terabytes of MP3s. Another advantage is that the cost of an individual piece of data is reduced to almost free. Pictures used chemicals, paper and other real resources. With digital you can take as many shots as you want and preview them right away. Being able to transfer images to a computer is nice as well. Backing up your photos online can save your family pictures from a disaster. You can share your pictures with more people. The list of advantages to digital is basically never ending.

So just stop and think about how far we've come with digital technology. We've literally gone from Kilobytes to Megabytes to Gigabytes to Terabytes and almost beyond in the last 30 years. A modern chip is basically the complexity of a city shrunk down to the size of your fingernail. My first PC had 4k of RAM. Nowadays a typical PC has Gigabytes of RAM, multiple processors and a secondary GPU that may be pushing teraflops of processing power.

I had the entire source code and all of the assets of the first game I worked on emailed to me a while back. It was 19MB. More on Radix later...

Saturday, May 5, 2012

Computing as a commodity

The market for personal computing has been evolving rapidly in the last decade. The modern era really began in the 70's with computers like the Apple ][ or the Commodore PET. Well, the Altair as well, but that was true hobbyist level stuff. With the Apple, pretty much anyone who was a little geeky could do neat things.

My first computer was a TI-99/4A which was kind of a weird hybrid game console / PC. It had a lot of expansion possibilities but mine was bare bones. You could buy game cartridges for it but I was poor and only had a few, fairly lame, games. Some kind of math asteroid blaster thing, a MASH game and their Pac-Man clone called Munch Man.

Anyway not to digress too much but I bring this up for a reason. If you look at the computers of that era, including the PC when it arrived, you pretty much had to type things at a prompt to do anything. In the case of the TI it booted up to a BASIC prompt. If you weren't interested in programming these machines would probably be kind of boring. But, if you could program the machine you could do utterly astounding things! It opened up an entire new world to me at the time. But realistically only a small portion of the population is going to want to deal with that kind of thing. It wasn't accessible in the way that something like an iPad is.

The reason I bring this up is to point out that nowadays grandma and grandpa are using computers. They have very specific needs like browsing the web and of course playing games. Now when you think about the form factor of a computer there are different tradeoffs in an iPad than a regular PC. For example there is no hard keyboard on the iPad. A hunt and peck typist may actually type faster on the iPad than on a keyboard. For most of the population a real keyboard may simply be a waste of time, especially if they don't have a reason to type much. Dictation, for example, has gotten a lot better and will improve more in the future, making typing redundant for Twitter updates and email.

Now consider the professional programmer like myself. I often use command line tools and write small applications in Python for processing data. I can type 100 wpm on a "real" keyboard. I can't imagine trying to get serious programming work done on an iPad. I would type a lot more slowly and I also need a lot of screen real estate when coding and debugging. The usage case for coding is just insanely different than what 99% of people need to do on their computer. Even for me, I could get away with an iPad for almost everything else.

So what are the implications here? To me it seems simple. We are going to see a split between consumers of content and creators in terms of what kind of computers we use. Most content consumption will happen on something like an iPad. Lots of content creation will also happen because you can create neat stuff on them. But for hardcore application development you will see niche operating system environments that most people don't use. It's entirely possible that the open desktop version of Windows eventually withers and programmers move over mostly to Linux. Hell, at places like Google you have to look hard to find a Windows box.

I think there are some other interesting implications as well. For a long time I thought that the generation after mine was going to have so many brilliant programmers that all of us vets would be out of a job and outdated. Well we surely do have some brilliant young talent around but not in anywhere near the numbers I would expect. I don't think it's lack of actual talent, I think it's that the computing environment we have now isn't as conducive to easing into programming as it was back in the day. Kids learn how to use Windows and browse the web but finding a way to program something is kind of daunting. BASIC programs that ran at a command line were easy to learn and experiment with. Of course this stuff still exists but you have to seek it out. There are simply so many things to waste time on (reddit) that why would anyone get around to programming unless they really cared about it? You could spend your entire life playing games that are free! Or get sucked into WoW...

Anyway I guess the conclusion here is that we are going to see a further separation of development tools and general computing. Desktop type power user computing will become more niche as most people use tablets or their cable box to get at the content they want. Hopefully we will find ways to expose young people to programming so that we can have future generations of software creators.

One other thing I would like to point out is that all of this could change quickly. For example there are several projects now underway that are pushing wearable computing. It's possible that new input methods, being connected to the net 24/7 and the ability to overlay AR type stuff might allow programming environments significantly more powerful than we have now. I fully expect within a decade for eye goggles capable of AR to be as commonplace as an iPhone is now.

I'm not claiming any crystal ball, just calling it as I see it, like usual..

Saturday, April 28, 2012

Let's Talk Procedural Content

Procedural content has been a buzz word for a while now. The concept isn’t new but people seem to be using it a bit more than in the past. Plenty of games have used procedural techniques in different areas. For example in TA we used a tool called Bryce to generate landscapes. Diablo and Minecraft used it for worlds. Many other examples exist.

When this is brought up, people invariably think that if you are using procedural tools your entire game has to be procedural. Forget about trying to do that. What I want to talk about is using procedural tools as part of the asset creation process to enhance the power of the tools that we already use. Think about it as poly modeling with really advanced tools.

The end goal here is to allow artists to create things quickly and efficiently, customize them and save them off for use in the game. This doesn’t imply that the procedural part has to be done at game runtime; these tools purely exist as a way for the artists to express themselves. This has a few benefits including fitting into current pipelines and being able to use procedural techniques that are extremely processor intensive. Pixar doesn’t send out the data they use to render their movies, they send out final rendered frames. Same idea. Sure being able to do it at runtime has some nice things going for it but it’s not always necessary in every case.

There are a bunch of issues with creating the procedural models themselves and I’ll talk about that some other time. Let’s just assume for a minute that we do have some procedural models that we want to use. By models I mean something that takes a set of inputs and gives a processed output. For example a procedural forest model would have things like type of tree, density of trees, overall canopy height etc and would generate trees, bushes etc in some area you’ve defined within your world. A procedural terrain model would allow you to specify what the ground was made of, how hilly it is etc.
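Just to pin down what I mean by "a set of inputs and a processed output", here is a tiny Python sketch of a forest model. Everything in it is an assumption for illustration: the `ForestParams` fields, the units, and the dumb uniform scatter are placeholders, not a description of any real tool. The one property that matters is that the same inputs (including the seed) always give the same forest.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ForestParams:
    tree_type: str        # e.g. "pine" (hypothetical parameter)
    density: float        # trees per 100 square metres (made-up unit)
    canopy_height: float  # typical tree height in feet
    seed: int = 0         # fixed seed so the result is reproducible


@dataclass(frozen=True)
class Tree:
    x: float
    y: float
    height: float
    kind: str


def generate_forest(params: ForestParams, width: float, depth: float) -> List[Tree]:
    """Scatter trees uniformly over a width x depth area (a stand-in for a real model)."""
    rng = random.Random(params.seed)
    count = int(params.density * width * depth / 100.0)
    trees = []
    for _ in range(count):
        trees.append(Tree(
            x=rng.uniform(0.0, width),
            y=rng.uniform(0.0, depth),
            # Vary each tree a little around the requested canopy height.
            height=params.canopy_height * rng.uniform(0.8, 1.2),
            kind=params.tree_type,
        ))
    return trees


sparse = generate_forest(ForestParams("pine", density=0.5, canopy_height=50.0, seed=7), 100.0, 100.0)
dense = generate_forest(ForestParams("pine", density=2.0, canopy_height=50.0, seed=7), 100.0, 100.0)
```

Changing `density` or `canopy_height` and calling `generate_forest` again is the whole workflow: the parameters are the asset, the trees are just the output.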

So let’s start out with tessellated terrain that’s slightly hilly. In your head basically just picture some slightly rolling hills that are about a square kilometer. Now let’s select that terrain and create a procedural forest model that overlaps it. We set the canopy to about 50ish feet, set the density fairly high and bam; we have a forest covering our terrain. I’m sure someone’s engine already does this so I’m not really taking a leap here.

Ok, the next thing is to find a nice flat area because I want to put down a building. If we don’t have a flat area we go back and fix that at the terrain generation stage. The forest will automatically regen to conform to the terrain because we can always “re-run” the procedural simulations if we store them off (duh).

We have our flat area covered by forest because we selected the entire terrain. Simply select the “blank out area” tool, or whatever you want to call it, and start erasing part of the forest model to create a clearing. All of this erasing is itself a procedural model that’s being applied as a construction step. It’s storing off the idea that the area should be cleared so that if we re-generate with slightly different parameters we still get the same basic result, a clearing in the forest. Of course if we modify the initial forest parameters we can easily change the type, density etc. of the forest.
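Here is a rough sketch in Python of the "erasing is a construction step" idea. The step names (`ScatterTrees`, `ClearCircle`) and the `rebuild` function are hypothetical, and trees are reduced to bare points to keep it short. What it shows is that the clearing is stored as an operation rather than as deleted trees, so it survives regenerating the forest with different parameters.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass(frozen=True)
class ScatterTrees:
    """Construction step: generate tree positions over a square area."""
    count: int
    size: float
    seed: int

    def apply(self, trees: List[Point]) -> List[Point]:
        rng = random.Random(self.seed)
        new = [(rng.uniform(0.0, self.size), rng.uniform(0.0, self.size))
               for _ in range(self.count)]
        return trees + new


@dataclass(frozen=True)
class ClearCircle:
    """Construction step: remember that this circle should stay empty."""
    cx: float
    cy: float
    radius: float

    def contains(self, p: Point) -> bool:
        return (p[0] - self.cx) ** 2 + (p[1] - self.cy) ** 2 <= self.radius ** 2

    def apply(self, trees: List[Point]) -> List[Point]:
        return [p for p in trees if not self.contains(p)]


def rebuild(steps) -> List[Point]:
    # Re-run every stored step in order; the result is never edited by hand.
    trees: List[Point] = []
    for step in steps:
        trees = step.apply(trees)
    return trees


clearing = ClearCircle(cx=50.0, cy=50.0, radius=15.0)
forest_a = rebuild([ScatterTrees(count=500, size=100.0, seed=1), clearing])

# Change the forest parameters; the clearing step is reused untouched.
forest_b = rebuild([ScatterTrees(count=2000, size=100.0, seed=2), clearing])
```

Both forests come out with the same hole in the middle even though every individual tree is different.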

Now that we have a hilly forest with a flat clearing in the middle we can start to add interesting game-play stuff. The forest itself is really a fancy backdrop but we can customize any particular element of it to our specific needs. So the next thing I want to add is a concrete bunker that has some shiny new metal parts on it including a door, various panels, bolts, pipes etc. This bunker could itself be a form of procedural model (e.g. a specialization of procedural buildings) or it could be a bespoke item created for game play purposes. Either way picture us plunking down a concrete bunker here.

Now we have a dense forest on rolling hills with a concrete bunker in the middle of a clearing. Let’s get started on the actual interesting stuff! The next level of procedural generation past this is to start doing serious simulation on the world. This is going to require a format that is flexible and allows both the textures and geometry of the world to be extensively modified. There are a lot of different formats that could be tried and as long as we can convert between them we can come up with different representations for different algorithms. Some sort of voxel type format might make a lot of sense for all of the models but I don’t want to make any particular assumption about the best way to do it.

First off let’s make it rain. We set the procedural weather model to be rainy (skycube to match!). We literally simulate water coming down from the sky. We see where it bounces; we potentially let it erode dirt. We let it form channels and see where it flows to. We can run this simulation for a little bit of time, or a lot. We can have a lot of rain, or a little. The longer we run it the more extreme its effects. For example it could start to rust and streak any metal. Picture our shiny bunker getting long streaks of rust running down from the panels and bolts. Picture the rain causing erosion as it goes down the hills and potentially forming lakes, puddles and streams. At any point we can “lock in” the simulation to capture how full the streams and lakes are etc. The point being we’ve taken a very processing-intensive simulation and applied it to try and get a lot of bespoke looking stuff without having to model these processes in the head of an artist.
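As a rough illustration of "run it for a while, then lock it in", here is a toy rain and erosion pass over a heightmap in Python. It is a deliberately crude sketch under stated assumptions, not a real hydraulic erosion model: the function name `simulate_rain`, the `rain` and `erosion` parameters and the rule "all water moves to the lowest lower neighbour and drags a little dirt with it" are made up for this example.

```python
def simulate_rain(height, steps, rain=0.01, erosion=0.5):
    """Toy rain/erosion pass over a heightmap (list of rows of floats).

    Returns (new_height, water). The input heightmap is not modified.
    """
    rows, cols = len(height), len(height[0])
    h = [row[:] for row in height]
    water = [[0.0] * cols for _ in range(rows)]
    for _ in range(steps):
        moved = [[0.0] * cols for _ in range(rows)]
        delta = [[0.0] * cols for _ in range(rows)]
        for r in range(rows):
            for c in range(cols):
                w = water[r][c] + rain  # this step's rainfall lands here
                tr, tc = r, c           # target: the lowest lower 4-neighbour
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and h[nr][nc] < h[tr][tc]:
                        tr, tc = nr, nc
                if (tr, tc) != (r, c):
                    drop = h[r][c] - h[tr][tc]
                    # Carry dirt downhill, but never more than half the drop.
                    carried = min(erosion * w, drop / 2)
                    delta[r][c] -= carried
                    delta[tr][tc] += carried
                moved[tr][tc] += w      # water pools where nothing is lower
        water = moved
        for r in range(rows):
            for c in range(cols):
                h[r][c] += delta[r][c]
    return h, water


slope = [[3.0, 2.0, 1.0]]                        # a tiny one-row hillside
short_run, puddles = simulate_rain(slope, steps=10)
long_run, lakes = simulate_rain(slope, steps=20)  # longer run, stronger effect
```

Whatever `simulate_rain` returns at the step count you stop at is the "locked in" state: the eroded terrain plus how much water is sitting in each cell.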

Now let’s go back to our forest. We started with a simple model that generated a dense forest to make life easy. But is there any reason that a forest aging model couldn’t be applied to the forest at the same time we are simulating the rain? For example trees that are near water could grow larger. Areas with sparse water could clear and eventually switch to other types of vegetation. We could simulate sunlight and plant growth patterns. We know how to do shadows pretty well, so let’s simulate the sun moving across the sky and see which patches get more sunlight. Picture our streaked bunker with grass starting to grow around it, vines snaking up the side, bushes growing up beside it and having their shape affected by the structure. If you ran it long enough maybe the forest itself would start to intrude on the structure and possibly even crack the concrete over time to create a ruin. As we improve our physics model we can certainly do things like finite element analysis to figure out and simulate breakage.

Are you starting to understand the power of this kind of modeling? We don’t need to be perfect, just create models that have interesting effects and combine them. This could be really cool even when simply done. Keep in mind I’m not taking any real leaps here; most of this is based on stuff I’ve seen happening already. I’ve seen water erosion in real-time, I’ve seen procedural forests, I’ve seen rust streaking. What we really need is a structure to build all of this stuff on top of. The majority of the actual work is going to be defining all of the procedural models. But that’s something that can be centrally done. Once people have created those models they can be re-used and upgraded in quality over time.

If we could define an extensible procedural model format that could plug into multiple tools we would really have something. This is an ongoing area of research, for example GML. A common net repository of models that anyone could contribute to, with standard (and actually usable) license terms, would mean we could all benefit. You can still build whatever bespoke stuff you need for your game but the time wasting work that’s the same every time could be made easier.

All that I’ve said here is a straightforward application of stuff we can already do. There are numerous products out there headed in this direction right now. CityEngine and SpeedTree are just some of the examples among many.

Obviously there is a lot of research to be done on this stuff going forward. I foresee more research on how best to represent these models, better simulation tools and lots of specialty models that do a domain specific job.

Speaking of specialty models, human characters are already widely created using procedural models. A lot of game companies have a custom solution to this and they all have different strengths and limitations. This could go away if we had a good commercial package that was a high quality implementation. It would create humans out of the box but the algorithms could be customized and extended (or downloaded from someone else who has done that). There is simply no reason that this needs to be reimplemented by every game company on earth. It’s obviously an active area of research already. Of course creating the geometry is hard, and getting it to animate is harder. But we’ll crack it eventually.

There are plenty of examples where specialized models would come in handy. This would enable creation of all kinds of animals and plants, specific types of rock etc. Hopefully the standard modeling language would be expressive enough to do most normal things. Domain specific ways of thinking about particular models will probably be necessary in some (a lot of?) cases. As long as we have a well defined environment for these models to interact in I think we can get away with a lot of hacky stuff at first.

If you are going to have a procedural forest it would make sense to have it include animals. Deer, bears, cattle, birds or whatever could be modeled and then given time based behavioral simulation. There is already precedent for actual mating of models so it’s possible we could simulate multiple generations and actually evolve new populations. This level of modeling is getting pretty sophisticated but I don’t see any intrinsic limitation.

Once you have a forest populated with procedural animals you can consider extending these algorithms even further. For example you could simulate the animal populations and their effects on the world so that over time you converge to some interesting results. That natural cave now has a bear living in it. Lakes and streams have fish that have evolved to use particular spawning grounds. Areas trampled often by animals could have less vegetation. Grazing areas and other types of foliage could be eaten or even spread by the animal population moving seeds about. Most of the behaviors I’m describing could be modeled using simple techniques that would still probably produce neat results. For example to model foliage destruction you could use a heat map of where the simulated animals walked. Look for seed sources and spread the seeds across the heat map graph using high traffic areas as connections. Then have those seeds germinate over time based on all of the other factors we’ve talked about.
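Here is a small Python sketch of that heat map idea. It makes a lot of simplifying assumptions: animals are plain random walkers on a square grid, trampling subtracts a fixed amount of vegetation per visit, and seeds spread by flood fill through any cell whose traffic is at or above a threshold. The function names and the `damage` and `threshold` parameters are invented for the example.

```python
import random

NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def traffic_heat_map(size, walkers, steps, seed=0):
    """Count how often each grid cell is visited by random-walking animals."""
    rng = random.Random(seed)
    heat = [[0] * size for _ in range(size)]
    for _ in range(walkers):
        r, c = rng.randrange(size), rng.randrange(size)
        for _ in range(steps):
            heat[r][c] += 1
            dr, dc = rng.choice(NEIGHBOURS)
            r = min(max(r + dr, 0), size - 1)  # clamp to the grid edge
            c = min(max(c + dc, 0), size - 1)
    return heat


def trample(vegetation, heat, damage=0.05):
    """Reduce vegetation in proportion to traffic, never below zero."""
    return [[max(0.0, v - damage * t) for v, t in zip(vrow, hrow)]
            for vrow, hrow in zip(vegetation, heat)]


def spread_seeds(sources, heat, threshold):
    """Flood fill from seed sources through cells with enough traffic."""
    size = len(heat)
    reached, frontier = set(), list(sources)
    while frontier:
        r, c = frontier.pop()
        if (r, c) in reached:
            continue
        reached.add((r, c))
        for dr, dc in NEIGHBOURS:
            nr, nc = r + dr, c + dc
            if 0 <= nr < size and 0 <= nc < size and heat[nr][nc] >= threshold:
                frontier.append((nr, nc))
    return reached


heat = traffic_heat_map(size=16, walkers=20, steps=200, seed=42)
vegetation = trample([[1.0] * 16 for _ in range(16)], heat)
seeded = spread_seeds([(8, 8)], heat, threshold=10)
```

The cells in `seeded` would then be the candidates for germination, weighted by water, sunlight and whatever else the other models produce.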

Now I’ve used a forest as an example here but that’s just one type of operator. There will be meta operators at every level here that feed into and affect one another. For example you could do a planetary generator that runs a meta simulation to decide where to place forest operators. Oceans in tropical areas near shorelines could generate coral reef operators. The weather operator combined with elevation would define temperature which could feed into other processes like snow level. I can see people creating a ton of custom operators that when combined together do fascinating things. Volcanoes that shape the landscape. Craters that are created through simulated bombardment and then eroded. Plate tectonics to shape continents? The ideas are endless if we have a framework to play around in.
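A tiny Python sketch of operators feeding one another, using the weather, elevation, temperature and snow chain as the example. The operator names are hypothetical, and the temperature model is an assumption: a constant drop of about 6.5 °C per kilometre of elevation, which is the usual textbook average and nothing more sophisticated.

```python
LAPSE_RATE_C_PER_M = 0.0065  # assumed average cooling with altitude


def temperature_operator(elevation, sea_level_temp_c):
    """Weather plus elevation in, temperature map out."""
    return [[sea_level_temp_c - LAPSE_RATE_C_PER_M * e for e in row]
            for row in elevation]


def snow_operator(temperature, freezing_c=0.0):
    """Temperature map in, snow mask out."""
    return [[t <= freezing_c for t in row] for row in temperature]


def forest_placement_operator(temperature, snow, min_temp_c=5.0):
    """Meta operator: decide where a forest operator should be placed."""
    return [[(t >= min_temp_c) and not s for t, s in zip(trow, srow)]
            for trow, srow in zip(temperature, snow)]


elevation = [[0.0, 1000.0, 3000.0]]  # metres
temperature = temperature_operator(elevation, sea_level_temp_c=15.0)
snow = snow_operator(temperature)
forest_sites = forest_placement_operator(temperature, snow)
```

Each operator only sees the maps produced by the ones before it, which is what lets people write new operators without knowing about all the others.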

Pie in the sky? Let’s check back in 2040 and laugh at the primitive ideas presented here. Or maybe our AI assistant will laugh for us.

Just wait until I start talking about augmented reality modeling tools. But that’s another story…

Friday, April 27, 2012

Strange Happenings at the Circle-K

I couldn't figure out a title for this one. It's just a random story from when we were working on Radix.

We needed to outsource some of the art because we were mostly coders. Specifically we were looking for work on textures, the main ship and enemies.

So a couple of guys we were working with hooked us up with these brothers. These two guys had been doing some playing around with 3d studio, photoshop and scanning slides to make cool images. They were, I think, primarily interested in doing web type work. Gaming always seems to attract people though and they definitely seemed excited about being involved with a game project.

Anyway, we meet them for the first time and talk about the project. They didn't have any kind of 3d model portfolio or anything because they had never done it before. This should have been a red flag, but back then everything was new and it was kind of hard to tell if someone could do something or not. So we agreed with them that we would meet up again in a couple of weeks and they would create some demo stuff for us. Specifically they were going to bring some frames of animation of the main ship flying and banking so we could get something better looking into the game, as a test.

So a week or two afterwards we all meet up at the office (not our office, our friends' office) and they have a disk with them. We are all excited to see what they've come up with. We insert the floppy (!!) into the drive, list the files and they look good. At the time we used Autodesk Animator for all of our texture needs which used the .cel format natively. We load up AA and try to load the first file.

AA pops up a message that says "corrupt file" and doesn't want to load it. Dammit, we thought. A bad disk. Let's try the next file. Same problem. And so on for all 8 files.

Keep in mind we were excited to see this stuff, we'd been waiting for weeks! So we copy the files to the hard drive and take a closer look. At this point we slip off to go have lunch with the guys. I think it was $1 Big Macs or something because I seem to recall a giant stack of Big Mac boxes. While we were away one of our coders was looking at the files. Now you have to realize we used this format in our game engine so we knew what the header was supposed to look like. In fact we used this format so much that we didn't even have to look at it in the debugger, we could literally interpret the files in a hex editor just by looking at them.

It was an early version of this format. As you can see viewing this in a hex editor it would be pretty apparent if there was a problem. You would expect a size number that was relatively close to the width*height with the header and the palette. The magic number would be clearly visible as well as the width and the height. In this case they should have been something like 128x128 (0x80).

Instead what we saw was something like:

20 20 20 20 20 20 20 20 20 20 20 20 20 20 with the rest looking like it was valid. I.e. it looked like there was actual image data later in the file but the header was stomped with 0x20 over and over. What kind of possible bug could have caused this bizarre stomping, which was slightly inconsistent from file to file?

Before we got any further worrying about that we sent the Brothers home so we could talk about it and look at the files more. Once they were gone we simply rebuilt the correct header and got them loading into AA. It soon became apparent what had happened. Basically they did contain some rendered ship frames, but they were utterly atrocious. Yes, we did recover them correctly, they were just really shitty looking. Our theory was that they purposefully corrupted the files to make it look like they had made the deadline because they wanted more time.
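For the curious, spotting and repairing that kind of damage takes only a few lines. The Python sketch below is an illustration under assumptions, not the code we used: the 32-byte header layout (16-bit magic 0x9119, width, height, x, y, one byte each for depth and compression, a 32-bit data size, 16 reserved bytes) is what I believe the old Animator header looked like, so check it against a real format reference before trusting it. The function names are made up too.

```python
import struct

# ASSUMED header layout, little-endian, 32 bytes in total:
# magic, width, height, x, y (16-bit each), depth, compression (8-bit each),
# data size (32-bit), 16 reserved bytes.
HEADER = struct.Struct("<HHHHHBBI16s")
MAGIC = 0x9119  # assumed magic number


def stomped_prefix(data, fill=0x20):
    """Count how many leading bytes equal the fill byte (0x20 is ASCII space)."""
    count = 0
    for byte in data:
        if byte != fill:
            break
        count += 1
    return count


def looks_valid(data):
    """Cheap sanity check: right magic number and a non-empty image size."""
    if len(data) < HEADER.size:
        return False
    magic, width, height, *_ = HEADER.unpack_from(data)
    return magic == MAGIC and width > 0 and height > 0


def rebuild_header(data, width, height):
    """Write a fresh header for a known image size, keeping the rest as-is."""
    header = HEADER.pack(MAGIC, width, height, 0, 0, 8, 0,
                         width * height, bytes(16))
    return header + data[HEADER.size:]


# Build a fake 128x128 file, stomp the first 14 bytes, then repair it.
good = rebuild_header(bytes(HEADER.size + 768 + 128 * 128), 128, 128)
bad = b"\x20" * 14 + good[14:]
fixed = rebuild_header(bad, 128, 128)
```

Rebuilding only works because the image size was known ahead of time (128x128 here); the pixel data itself was never touched.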

We didn't have much patience for that kind of bullshit so we told them not to bother. I remember the conference call where we confronted them and we had it right on the money.

Eventually we ended up having the guys we were working with (more about them later) do most of the art. We also outsourced quite a bit to Cygnus Multimedia but they were pricey and it was hard to get the exact results we wanted.

Anyway chalk it up to one more learning experience. Sometimes bizarre shit happens.

Clear Communication

When you are doing any business deal it's really important to be on the same page. Don't make any assumptions about how the other party sees the deal. Be direct and upfront about how you interpret the terms so that there are no misunderstandings. It's not "cool" to sneak some shitty term into a contract.

The reason I bring this up is the very first deal I was involved in. This was at the beginning of Radix where we hooked up with an existing company. The idea is that they would bring in some artists and also help with cashflow. They were also supplying some (pathetic) equipment and space to work (drafty).

So my understanding was that they were going to pay us $20 an hour plus we would both own the project and split the proceeds. This is before I even got the concept of game IP or the long term value that it could have.

Anyway they worked in this old building with dodgy wiring and crap heating. They gave us one computer and a whiteboard to share amongst us. The "pay" would be deferred until we actually made money somehow. Basically it was a total waste of time.

But at the time I didn't see it. One of the other guys was like "wtf are we doing" and refused to participate anymore. It really took that for the rest of us to look at the deal and realize we had no fucking idea what we were really doing there.

Now I was the guy that initially hooked us up with these guys. I had met them through the local BBS scene and their main programmer was a good friend of mine. His business partner on the other hand was this slick car salesman like guy who ran the business. Somehow I also noticed my friend (who did the work) never had any money but this guy had a new car, nicely furnished new place etc. They split up a while later and he got a real job (at I think Cognos).

So we all sit down and write up an actual contract that we think represents the deal that we've already negotiated. We walk in and tell these guys we need to meet and talk about the details and present them with the contract. The biz guy starts reading the first page and is like, "ok, ok, ok" etc. So he looks up and says, "No problem guys this looks fine."

Then Dan says, "What about the second page?" which is where all the meat was.

So car guy looks up and goes, "Second page?" in a bewildered voice, flips the page and starts going, "No! No! No! No! No! Completely unacceptable!" He looks up at me and tells me it's my fault.

That was the last time we were over there. Dan ended up hooking us up with some other guys who actually knew games and they helped us ship Radix. More on that later...

Thursday, April 26, 2012

Thred - Three Dimensional Editor

The story of Thred. This is the program that taught me about the emerging power of the internet.

In December of 1995 we shipped Radix: Beyond the Void as a shareware title. I'll write up the story of Radix some other time but basically it didn't sell well and the team broke up. I ended up taking a few months off, going to GDC and meeting Chris Taylor who offered me a job on TA. However, during the time between when he offered me the job and when I started we needed to deal with visa paperwork crap. So I was sitting at my desk in Kanata knowing I had a job with nothing to do until the paperwork came in.

So I did what any programmer would do, I started on a new project. At the time we wrapped up Radix Shahzad had been working on some new engine tech that could render arbitrary 3d environments. During Radix we used a 2D editor I wrote called RadCad to make the levels. But to make 3d environments we had nothing. I really just wanted to learn how to write a fully 3d editor because I knew that was the next step and I was excited to get going.

Let me digress a bit and talk about BSP trees. This was a really hot topic at the time because Carmack had used 2D BSPs in Doom. When we initially wrote the Radix engine we used a ray-casting approach but it was just suboptimal compared to the BSP. I somehow managed to shoehorn a 2D BSP into Radix not long before we shipped so I had an understanding of how they worked.

So I started out writing a simple editor that could draw polygonal shapes with no textures and no shading. Once I had some basic navigation and movement I started to add the ability to CSG the shapes together to build more complex pieces of level. There was a lot of this kind of research going on at the time including Quake which was coming soon.

So to do the 3D CSG that was required I experimented with a ton of different BSP construction methods. The easiest one is to just build a BSP one piece at a time doing the correct operation but it results in pretty bad splits. If you rebuild from scratch you can balance the tree better and improve the result. Anyway the bottom line is that I got it mostly working in about a week or so.
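To show why the construction order matters, here is a small 2D sketch in Python. It is not Thred's code and it skips the CSG operations entirely; it only builds a BSP from line segments two ways and counts the resulting nodes. Taking the first segment as the splitter at every level gives the same tree as inserting pieces one at a time in order, while `fewest_splits` is one simple assumed heuristic for a rebuild from scratch. All names are made up for the example.

```python
EPS = 1e-9


def side(line, p):
    """Signed area: > 0 if p is left of the directed line, < 0 if right."""
    (ax, ay), (bx, by) = line
    return (bx - ax) * (p[1] - ay) - (by - ay) * (p[0] - ax)


def split(line, seg):
    """Return (front, back) pieces of seg relative to line; either may be None."""
    a, b = seg
    sa, sb = side(line, a), side(line, b)
    if sa >= -EPS and sb >= -EPS:
        return seg, None          # entirely in front (or collinear)
    if sa <= EPS and sb <= EPS:
        return None, seg          # entirely behind
    t = sa / (sa - sb)            # where the segment crosses the line
    m = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
    return ((a, m), (m, b)) if sa > 0 else ((m, b), (a, m))


def build(segs, pick):
    """Build a BSP as nested (splitter, front_tree, back_tree) tuples."""
    if not segs:
        return None
    splitter = pick(segs)
    front, back = [], []
    for s in segs:
        if s is splitter:
            continue
        f, b = split(splitter, s)
        if f:
            front.append(f)
        if b:
            back.append(b)
    return (splitter, build(front, pick), build(back, pick))


def first(segs):
    """Equivalent to inserting pieces one at a time, in the given order."""
    return segs[0]


def fewest_splits(segs):
    """Rebuild heuristic: choose the splitter that cuts the fewest segments."""
    def cost(s):
        return sum(1 for o in segs if o is not s and all(split(s, o)))
    return min(segs, key=cost)


def size(tree):
    return 0 if tree is None else 1 + size(tree[1]) + size(tree[2])


# One short vertical wall followed by three long horizontal walls above it.
walls = [((0.0, -1.0), (0.0, -0.5)),
         ((-2.0, 1.0), (2.0, 1.0)),
         ((-2.0, 2.0), (2.0, 2.0)),
         ((-2.0, 3.0), (2.0, 3.0))]
print(size(build(walls, first)))          # 7: the first wall cuts all three
print(size(build(walls, fewest_splits)))  # 4: no segment gets cut
```

Fewer splits means fewer polygons to store and draw, which is the whole reason to bother rebuilding instead of just appending to the tree.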

And that probably would have been it if it wasn't for a certain confluence of events. Even though Radix didn't do very well we had a few fans of the game. One of them was a really smart guy named Jim Lowell who pointed out that the editor (which I had shown him) could be used to edit Quake levels. Now Quake wasn't out yet but there was the quake test and all of that so we generally knew how it worked.

The real genius Jim had though was to post this on a web page. Remember, in 1996 the web wasn't that mature yet. I hadn't really grasped how much it was going to change the world. Bottom line, the first version of Quake came out (a test or maybe the shareware something) and I managed to get a level up and running in about 3 hours.

Jim pretty much immediately posted some screenshots of the level and may have actually released a level. This caused quite a stir as people were making quake levels using a text editor at the time. I had just lucked into being the first person in the world with this tech ready to go.

And then I got an email from Carmack. I wish I still had access to my email archives from the Ottawa freenet but I don't. Basically he asked me how I had cracked the protection... or if he had boneheaded the check. I said he must have boneheaded it (he had) because I didn't have to do anything to get it to work. Quake was supposed to need a valid license file before reading 3rd party levels. Oops.

Anyway id was nice enough to send me a burned version of the full game when it was released. I think I still have this somewhere although it may have humungous stickers on it.

This is where the story goes crazy. I started getting massive numbers of emails from people looking to license the editor, have me write a book, work for them etc. Now I was already planning on going to work at Cavedog so I wasn't terribly interested in most of this stuff. But I got interested quickly when people started wanting to pay serious cash for the editor. The power of the internet was revealing itself to me.

The guy that intrigued me the most was someone named Steve Foust from Minnesota. Steve called me and told me he would give me a bunch of money upfront and a back end royalty if I didn't release the editor. He wanted to license it, make a Quake level pack while nobody else could and make a killing. He was fine with me releasing it after the pack came out. The company Steve was with was called Head Games and it was eventually acquired by Activision.

A book company called me and wanted me to write a book. I'd never considered that and didn't really think it was up my alley. Based on what I understand of the economics of it I probably dodged a bullet as game books don't usually make any money. A lot of publishers will pay extremely low royalties like 10% on books. Bottom line, I was a better programmer than author. I would probably be more into that today but why not just blog if I have something to say?

Anyway I eventually got my paperwork and headed for Seattle. Aftershock for Quake came out and I released the editor to the world. I also licensed it to a bunch of companies including Sierra On-Line and Eclipse Entertainment. It also got me an interview at Raven, where they did offer me a job that I declined. Seattle and TA were waiting and a lot more exciting.

All of the licensing was my first real exposure to these kinds of contracts. We had done a shareware publishing deal with Epic before that, but that's pretty much it. I left a lot of money on the table back then because I was a horrible negotiator. I had one of the licensees tell me recently that he probably would have paid me double the amount if I had bothered to hold out at all. This is called paying your dues or stupid tax in Dave Ramsey speak.

Also if you feel like I'm focusing on the financials a lot in this it's because I am. My family wasn't very well off and I had a lot of things I wanted that I was working hard for. Money was definitely a way that I measured my progress towards my goals. As Zig Ziglar says success is the progressive realization of a worthwhile goal. I don't know if a Mustang Cobra was a worthwhile goal or not but by October of 1996 I had a brand new black Cobra. That was, by far, the most exciting material thing that I've ever bought. I had been lusting after 5.0 Mustangs for years before I even got my license. Next I needed to get a WA state driver's license but that's another story. Bottom line, Thred is the program that for the first time in my life put me in a financial position that wasn't shit.

Thred is also how I met Gabe Newell for the first time. Not long after Valve was founded I went over there to talk to them and see what they were about. They had already hired Yahn Bernier who wrote the editor BSP as well as Ben Morris who wrote WorldCraft, so they didn't need another editor. We didn't really talk jobs because I was happy working at Cavedog. Our office at Uber today is just down the road from that building where Valve started. Valve today blows my mind btw but... that's another story.

Anyway I think the fan community had a lot higher expectations than I had. To me Thred was just a tool to learn what I needed to know about 3D editing. I think the community expected me to make a career of expanding and supporting Thred. In reality I was busy writing new code for my employer and trying to actually create a top level game. Jim really took most of the flak for this, sorry about that Jim.

The fact that the first title I got to work on after Radix was Total Annihilation is just an incredible bit of luck. I did pass up other opportunities including more Thred work or even working at Raven because Chris had utterly convinced me that his vision was the next big thing. I was a hardcore C&C fan and I wasn't going to lose the opportunity to work on what I thought was going to be a brilliant game.

Eventually the thought process behind Thred would turn into Eden which was the Cavedog editor for the Amen Engine. But that's another story...

In December of 1995 we shipped Radix: Beyond the Void as a shareware title. I'll write up the story of Radix some other time but basically it didn't sell well and the team broke up. I ended up taking a few months off, going to GDC and meeting Chris Taylor who offered me a job on TA. However, during the time between when he offered me the job and when I started we needed to deal with visa paperwork crap. So I was sitting at my desk in Kanata knowing I had a job with nothing to do until the paperwork came in.

So I did what any programmer would do, I started on a new project. At the time we wrapped up Radix Shahzad had been working on some new engine tech that could render arbitrary 3d environments. During Radix we used a 2D editor I wrote called RadCad to make the levels. But to make 3d environments we had nothing. I really just wanted to learn how to write a fully 3d editor because I knew that was the next step and I was excited to get going.

Let me digress a bit and talk about BSP trees. This was a really hot topic at the time because Carmack had used 2D BSP's in doom. When we initially wrote the Radix engine we used a ray-casting approach but it was just suboptimal compared to the BSP. I somehow managed to shoehorn in a 2D BSP into Radix not long before we shipped so I had an understanding of how they worked.

So I started out writing a simple editor that could draw polygonal shapes with no textures and no shading. Once I had some basic navigation and movement I started to add the ability to CSG the shapes together to build more complex pieces of level. There was a lot of this kind of research going on at the time including Quake which was coming soon.

So to do the 3D CSG that was required I experimented with a ton of different BSP construction methods. The easiest one is to just build a BSP one piece at a time doing the correct operation but it results in pretty bad splits. If you rebuild from scratch you can balance the tree better and improve the result. Anyway the bottom line is that I got it mostly working in about a week or so.

And that probably would have been it if it wasn't for a certain confluence of events. Even though Radix didn't do very well we had a few fans of the game. One of them was a really smart guy named Jim Lowell who pointed out that the editor (which I had shown him) could be used to edit Quake levels. Now Quake wasn't out yet but there was the Quake test and all of that so we generally knew how it worked.

The real genius Jim had though was to post this on a web page. Remember, in 1996 the web wasn't that mature yet. I hadn't really grasped how much it was going to change the world. Bottom line, the first version of Quake came out (a test or maybe the shareware something) and I managed to get a level up and running in about 3 hours.

Jim pretty much immediately posted some screenshots of the level and may have actually released a level. This caused quite a stir as people were making Quake levels using a text editor at the time. I had just lucked into being the first person in the world with this tech ready to go.

And then I got an email from Carmack. I wish I still had access to my email archives from the Ottawa freenet but I don't. Basically he asked me how I had cracked the protection... or if he had boneheaded the check. I said he must have boneheaded it (he had) because I didn't have to do anything to get it to work. Quake was supposed to need a valid license file before reading 3rd party levels. Oops.

Anyway id was nice enough to send me a burned version of the full game when it was released. I think I still have this somewhere although it may have humungous stickers on it.

This is where the story goes crazy. I started getting massive numbers of emails from people looking to license the editor, have me write a book, work for them etc. Now I was already planning on going to work at Cavedog so I wasn't terribly interested in most of this stuff. But I got interested quickly when people started wanting to pay serious cash for the editor. The power of the internet was revealing itself to me.

The guy that intrigued me the most was someone named Steve Foust from Minnesota. Steve called me and told me he would give me a bunch of money upfront and a back end royalty if I didn't release the editor. He wanted to license it, make a Quake level pack while nobody else could and make a killing. He was fine with me releasing it after the pack came out. The company Steve was with was called Head Games and it was eventually acquired by Activision.

A book company called me and wanted me to write a book. I'd never considered that and didn't really think it was up my alley. Based on what I understand of the economics of it I probably dodged a bullet as game books don't usually make any money. A lot of publishers will pay extremely low royalties like 10% on books. Bottom line, I was a better programmer than author. I would probably be more into that today but why not just blog if I have something to say?

Anyway I eventually got my paperwork and headed for Seattle. Aftershock for Quake came out and I released the editor to the world. I also licensed it to a bunch of companies including Sierra On-Line and Eclipse Entertainment. It also got me an interview at Raven, where they did offer me a job that I declined. Seattle and TA were waiting and a lot more exciting.

All of the licensing was my first real exposure to these kinds of contracts. We had done a shareware publishing deal with Epic before that, but that's pretty much it. I left a lot of money on the table back then because I was a horrible negotiator. I had one of the licensees tell me recently that he probably would have paid me double the amount if I had bothered to hold out at all. This is called paying your dues or stupid tax in Dave Ramsey speak.

Also if you feel like I'm focusing on the financials a lot in this it's because I am. My family wasn't very well off and I had a lot of things I wanted that I was working hard for. Money was definitely a way that I measured my progress towards my goals. As Zig Ziglar says, success is the progressive realization of a worthwhile goal. I don't know if a Mustang Cobra was a worthwhile goal or not but by October of 1996 I had a brand new black Cobra. That was, by far, the most exciting material thing that I've ever bought. I had been lusting after 5.0 Mustangs for years before I even got my license. Next I needed to get a WA state driver's license but that's another story. Bottom line, Thred is the program that for the first time in my life put me in a financial position that wasn't shit.

Thred is also how I met Gabe Newell for the first time. Not long after Valve was founded I went over there to talk to them and see what they were about. They had already hired Yahn Bernier who wrote the editor BSP as well as Ben Morris who wrote WorldCraft, so they didn't need another editor. We didn't really talk jobs because I was happy working at Cavedog. Our office at Uber today is just down the road from that building where Valve started. Valve today blows my mind btw but... that's another story.

Anyway I think the fan community had a lot higher expectations than I had. To me Thred was just a tool to learn what I needed to know about 3D editing. I think the community expected me to make a career of expanding and supporting Thred. In reality I was busy writing new code for my employer and trying to actually create a top level game. Jim really took most of the flak for this, sorry about that Jim.

The fact that the first title I got to work on after Radix was Total Annihilation is just an incredible bit of luck. I did pass up other opportunities including more Thred work or even working at Raven because Chris had utterly convinced me that his vision was the next big thing. I was a hardcore C&C fan and I wasn't going to lose the opportunity to work on what I thought was going to be a brilliant game.

Eventually the thought process behind Thred would turn into Eden which was the Cavedog editor for the Amen Engine. But that's another story...

Playoff Hockey

Well the Senators are officially out again and this may be Alfie's last game. Oh well, I guess this means I don't have to watch any more hockey and can get back to concentrating on work.

Wednesday, April 25, 2012

Dependency Injection

Does anyone else really enjoy writing code using straight up injection? Some of the work I've been doing recently I've completely avoided global variables and inject every bit of state. It naturally helps multithreading but it has a lot of other nice things going for it as well. Global variables are just poison. Sometime I'll go into some detail on the advantages that the MB engine has because of it.
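Just to show what I mean by injecting state, here's a tiny sketch. The names (`UnitSpawner`, `rng`, `log`) are invented for the example and have nothing to do with the actual engine code. The point is only that the object touches nothing it wasn't handed.

```python
import random

class UnitSpawner:
    """Hypothetical example: all state arrives through the constructor, no globals."""

    def __init__(self, rng, log):
        self.rng = rng   # injected random source instead of the global random module
        self.log = log   # injected list instead of a global log

    def spawn(self, kinds):
        kind = self.rng.choice(kinds)
        self.log.append(kind)
        return kind

# Two spawners with their own injected state never interfere with each other,
# so each could live on its own thread, and a fixed seed makes runs repeatable.
kinds = ["tank", "bot", "plane"]
a = UnitSpawner(random.Random(7), [])
b = UnitSpawner(random.Random(7), [])
```

Because nothing is shared, `a` and `b` produce the same sequence from the same seed and each keeps its own log, which also makes this kind of code easy to test.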

Total Annihilation Graphics Engine

For a long time I've wanted to spend some time writing down my recollections of what I did on the TA graphics engine. It was a weird time, just before hardware acceleration showed up. Early hardware acceleration had pretty insane driver overhead. For example the first Glide API did triangle setup because the hardware didn't have it yet. Accelerated transform was out of the question. Anyway none of this was really a factor because that stuff was just showing up when we were working on TA and we couldn't have sold any games on it.

Anyway I met Chris at GDC in 1996 and he fairly quickly offered me a job working on the game. I had just wrapped up work on Radix a few months before and was looking for something new since most of the Radix guys were going back to school.

So I went back to Ottawa and while I waited for visa paperwork to move to the states I ended up writing Thred which became a whole other story that I'll talk about some other time. Once the visa paperwork came through I moved to Seattle at the end of July 1996 just in time for Seafair.

Monday morning rolls around and I start meeting my new co-workers and getting the vibe. I got a brand new smoking hot Pentium 166MHz right out of a Dell box. Upgraded to 32MB of RAM even! That was the first time I ever saw a DIMM incidentally. We all ooo'd and ahhh'd over this new amazing DIMM technology. I was super excited to be there and actually getting paid too!

I had already done a bit of work remotely so I had a little bit of an idea about the code but I hadn't seen the whole picture. The engine was primarily written in C using mostly fixed point math. At that point using floats wasn't really done but it made sense to start using them. So we did. This means we ended up with an engine that was a blend of fixed point and floating point, including a decent amount of floating point asm code. Ugh. Jeff and I both tried to rip out the fixed point stuff but it was ingrained too deep. Oh well.
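For anyone who hasn't had the pleasure, here's roughly what fixed point math looks like. This is a sketch assuming a 16.16 format (16 integer bits, 16 fractional bits). I'm not claiming that's the exact format the TA code used, and the function names are just for illustration.

```python
# Sketch of 16.16 fixed point arithmetic (the format is an assumption).
FRAC_BITS = 16
ONE = 1 << FRAC_BITS   # the value 1.0 in fixed point

def to_fixed(x):
    """Convert a float to fixed point."""
    return int(round(x * ONE))

def to_float(f):
    """Convert fixed point back to a float."""
    return f / ONE

def fixed_mul(a, b):
    """Multiply two fixed point values.
    The raw product carries 32 fractional bits, so shift back down to 16."""
    return (a * b) >> FRAC_BITS

def fixed_add(a, b):
    """Addition needs no adjustment because both operands share the same scale."""
    return a + b
```

Every multiply needs that extra shift, and mixing this with real floats means converting back and forth at every boundary, which is a big part of why a blended engine gets ugly.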

So my primary challenge on the rendering side was to increase the performance of the unit rendering, improve image quality and add new features to support the game play.

The engine was also very limited graphically in a lot of ways because it was using an 8-bit palette. This meant I had to use a lot of lookup tables to do things like simple alpha blending. Even simple Gouraud shading would require a lookup table for the light intensity value. Nasty compared to what we do today. The artists did come up with a versatile palette for the game but 256 colors is still 256 colors at the end of the day.
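Here's a sketch of the lookup table idea for alpha blending in a palettized world. It's an illustration, not the TA code: the tiny gray palette, the fixed 50% alpha and the names `nearest_index` and `build_blend_table` are all assumptions. With a real 256 color palette you'd build the 256x256 table once offline, because the brute force search here is slow.

```python
def nearest_index(palette, rgb):
    """Index of the palette entry closest to rgb (squared RGB distance)."""
    return min(range(len(palette)),
               key=lambda i: sum((palette[i][c] - rgb[c]) ** 2 for c in range(3)))

def build_blend_table(palette, alpha=128):
    """table[src][dst] is the palette index that best matches src blended over dst.
    alpha is the weight of src in the range 0..256 (assumed fixed per table)."""
    n = len(palette)
    table = [[0] * n for _ in range(n)]
    for s in range(n):
        for d in range(n):
            mixed = tuple(
                (palette[s][c] * alpha + palette[d][c] * (256 - alpha)) >> 8
                for c in range(3)
            )
            table[s][d] = nearest_index(palette, mixed)
    return table

# Tiny stand-in palette: five gray levels instead of the real 256 colors.
GRAYS = [(v, v, v) for v in (0, 64, 128, 192, 255)]
BLEND = build_blend_table(GRAYS)

def blend_pixel(src, dst):
    """At draw time a blend is just one table lookup on two palette indices."""
    return BLEND[src][dst]
```

The table trades memory for speed: one 64K table per alpha level with a full palette, but the inner loop of the blitter never touches RGB values at all.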

Getting all of the units to render as real 3d objects was slow. Basically all of the units and buildings were 3d models. Everything else was either a tiled terrain backdrop or what we called a feature which was just an animated sprite (e.g. trees).

So there were a few obvious things to do to make this faster. One of them was to somehow cache the 3d units and turn them into a sprite which could be rendered a lot more quickly. For a normal unit like a tank we would cache off a bitmap that contained the image of the tank rendered at the correct orientation (we call this an imposter today, look up Talisman). There was a caching system with a pool that we could ask to give us the bitmap. It could de-allocate to make room in the cache using a simple round robin scheme. The more memory your machine had the bigger the cache was, up to some limit. We would store off the orientation of that image and then simply blt it to the screen to draw the tank. If a tank was driving across flat terrain at the same angle we could move the bitmap around because we used an orthographic projection. Units sitting on the ground doing nothing were effectively turned into bitmaps. Wreckage too.
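A cache like that can be sketched in a few lines. This is a guess at the general shape, not the original code: the `SpriteCache` class, the `render` callback and the idea of keying on (unit type, orientation) are assumptions made for the example.

```python
class SpriteCache:
    """Fixed-size pool of rendered bitmaps with round robin eviction (a sketch)."""

    def __init__(self, capacity, render):
        self.capacity = capacity
        self.render = render             # callable(key) -> bitmap, the slow 3D path
        self.slots = [None] * capacity   # each slot holds (key, bitmap) or None
        self.index = {}                  # key -> slot number
        self.next_victim = 0             # round robin pointer

    def get(self, key):
        slot = self.index.get(key)
        if slot is not None:
            return self.slots[slot][1]   # cache hit: no rendering needed
        # Cache miss: take the next slot in order, evicting whatever lives there.
        slot = self.next_victim
        self.next_victim = (slot + 1) % self.capacity
        old = self.slots[slot]
        if old is not None:
            del self.index[old[0]]
        bitmap = self.render(key)
        self.slots[slot] = (key, bitmap)
        self.index[key] = slot
        return bitmap

# Usage: the key might be (unit type, orientation); here plain strings stand in.
rendered = []

def fake_render(key):
    rendered.append(key)     # record each time the expensive path runs
    return ("bitmap", key)

cache = SpriteCache(2, fake_render)
```

Round robin is about as dumb as eviction gets, but it needs no per-entry bookkeeping, which counts when the whole point is to save cycles.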

There was another wrinkle here; the actual units were made from polygons that had to be sorted. But sometimes the animators would move the polys through each other which caused weird popping so a static sorting was no good. In addition it didn't handle intersection at all. So I decided to double the size of the bitmap that I used and Z-buffer the unit (in 8-bits) only against itself. So it was still turned into a bitmap but at least the unit itself could intersect, animate etc without having to worry about it. I think at the time this was the correct decision and actually having a full screen Z-buffer for the game probably also would have been the correct decision (instead we rendered in layers).
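Here's a toy version of Z-buffering a unit against only itself. It's a sketch under some assumptions: a one-row "bitmap", spans with linearly interpolated depth standing in for real polygons, and a convention where a larger 8-bit value means farther away. None of the names come from the original code.

```python
FAR = 255   # 8-bit depth; larger means farther from the camera (assumed convention)

def make_buffers(width, height):
    """Per-unit color and depth buffers, both sized to the unit's own bitmap."""
    color = [[0] * width for _ in range(height)]
    depth = [[FAR] * width for _ in range(height)]
    return color, depth

def draw_span(color, depth, y, x0, x1, z0, z1, c):
    """Draw one horizontal span with depth interpolated from z0 to z1.
    A pixel is written only if it is nearer than what is already there."""
    n = x1 - x0
    for i in range(n + 1):
        z = z0 + (z1 - z0) * i // n if n else z0
        x = x0 + i
        if z < depth[y][x]:
            depth[y][x] = z
            color[y][x] = c

# Two spans that pass through each other: no single draw order sorts them
# correctly, but the per-pixel depth test resolves the intersection.
color, depth = make_buffers(5, 1)
draw_span(color, depth, 0, 0, 4, 0, 200, 1)   # color 1, near on the left
draw_span(color, depth, 0, 0, 4, 200, 0, 2)   # color 2, near on the right
```

After both spans are drawn the row holds color 1 on the left and color 2 on the right, which a painter's sort of whole polygons can't produce.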

Now all of this sounds great but there were other issues. For example a lot of units moving on the screen at the same time could still bring the machine to its knees. I could limit this to some extent by limiting the numbers of units that got updated any given frame. For example rotation could be snapped more which means not every unit has to get rendered every frame. Of course units of the same type with the same transform could just use the same sprite. Even with everything I could come up with at the time you could still worst case it and kill performance. Sorry! I was given a task that was pretty hard and I did my best.
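The rotation snapping and sprite sharing boil down to picking a coarse cache key. A sketch, assuming 32 heading steps (the real number isn't something I'm quoting here) and made-up function names:

```python
def snap_heading(degrees, steps=32):
    """Quantize a heading to one of `steps` directions (32 is an assumed value)."""
    step = 360 / steps
    return int(round(degrees / step)) % steps

def sprite_key(unit_type, heading_degrees, steps=32):
    """Units of the same type with the same snapped heading share one sprite."""
    return (unit_type, snap_heading(heading_degrees, steps))

def sprites_needed(units, steps=32):
    """Number of distinct sprites required for a list of (type, heading) units."""
    return len({sprite_key(t, h, steps) for t, h in units})

# Five tanks heading in roughly two directions need only two rendered sprites.
tanks = [("tank", 44), ("tank", 45), ("tank", 46), ("tank", 90), ("tank", 91)]
```

Coarser snapping means fewer re-renders but chunkier turning, so the step count is a straight quality versus speed dial.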

Once I had all the moving units going I realized I had a problem. The animators wanted the buildings to animate with spinny things and other objects that moved every frame! The buildings were some of the most expensive units to render because of their size and complexity. By even animating one part they were flushing the cache every frame and killing performance. So I came up with another idea. I split the building into animating and non-animating parts. I pre-rendered the non-animating parts into a buffer and kept around the z-buffer. Then each frame I rendered just the animating parts against that base texture using the z-buffer and then used the result for the screen. In retrospect I could have sped this up by doing this part on the screen itself but there were some logistical issues due to other optimizations.
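In code the building trick looks something like this. It's a sketch with assumed names and an assumed data layout (lists of rows, animated parts already rasterized into (x, y, z, color) pixels), not the original routine.

```python
def compose_building(static_color, static_depth, animated_pixels):
    """Combine a cached static render with this frame's animated parts.

    static_color and static_depth are the pre-rendered base image and its
    z-buffer. animated_pixels is a list of (x, y, z, color) tuples for the
    moving parts. The cached buffers are copied, never modified, so they
    can be reused next frame."""
    color = [row[:] for row in static_color]
    depth = [row[:] for row in static_depth]
    for x, y, z, c in animated_pixels:
        if z < depth[y][x]:      # smaller z is nearer (assumed convention)
            depth[y][x] = z
            color[y][x] = c
    return color

# A 3-pixel "building": the left pixel is near, the middle is mid-depth,
# the right is empty. An animated part at depth 50 spans all three pixels.
base_color = [[5, 5, 5]]
base_depth = [[10, 100, 255]]
spinner = [(0, 0, 50, 9), (1, 0, 50, 9), (2, 0, 50, 9)]
frame = compose_building(base_color, base_depth, spinner)
```

The expensive static geometry is rendered once, and each frame costs a buffer copy plus the few animated polygons, which still get correctly hidden behind the nearer parts of the building.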

After I had the building split out, the animating stuff split out, the z-buffering and the caching I still had a few more things I needed to do. I haven't talked about shadows at all. Unit shadows and building shadows were handled differently. Unit shadows simply took the cached texture and rendered it offset from the unit with a special shader (shader haha it was a special blt routine really) that used a darkening palette lookup. E.g. if there was anything at that texel just render shadow there like an alpha test type deal. This gave me some extra bang for the buck in the caching because I had another great use for that texture and I think the shadows hold up well.
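The shadow blt is simple enough to sketch. Assumptions here: a tiny gray palette, index 0 as the transparent texel, a 50% darkening table and invented function names. The real routine was a hand-tuned blitter, not Python.

```python
def nearest_index(palette, rgb):
    """Index of the palette entry closest to rgb (squared RGB distance)."""
    return min(range(len(palette)),
               key=lambda i: sum((palette[i][c] - rgb[c]) ** 2 for c in range(3)))

def build_darken_table(palette, shade=128):
    """darken[i] is the palette index nearest to color i scaled by shade/256."""
    return [
        nearest_index(palette, tuple((ch * shade) >> 8 for ch in color))
        for color in palette
    ]

def blit_shadow(screen, sprite, darken, ox, oy, transparent=0):
    """Darken the screen wherever the cached sprite has any texel at all.
    The sprite's colors are ignored; it acts only as a mask (an alpha test)."""
    for sy, row in enumerate(sprite):
        y = oy + sy
        if not 0 <= y < len(screen):
            continue
        for sx, texel in enumerate(row):
            x = ox + sx
            if texel == transparent or not 0 <= x < len(screen[y]):
                continue
            screen[y][x] = darken[screen[y][x]]

# Stand-in palette of four grays; the screen starts out fully white (index 3).
GRAYS = [(v, v, v) for v in (0, 64, 128, 255)]
DARKEN = build_darken_table(GRAYS)
screen = [[3, 3, 3], [3, 3, 3]]
sprite = [[0, 1], [1, 1]]        # 0 = transparent texel (assumed convention)
blit_shadow(screen, sprite, DARKEN, 1, 0)
```

The same cached bitmap gets used twice, once as the unit and once offset as a mask for the shadow, which is where the extra bang for the buck comes from.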